Learn how we are exploring the potential of synthetic data to improve automatic speech recognition for low-resource African languages

Africa is home to over 2,300 languages, and most of them are supported neither by automatic speech recognition (ASR), which transcribes speech, nor by speech synthesis, which generates it. Developing speech technologies requires voice datasets: collections of voice recordings, their transcriptions, and accompanying metadata. While thousands of hours of speech data exist for globally dominant languages like English or French, enabling the development of high-quality speech models, the data needed to develop functional models for many low-resource languages is scarce or altogether nonexistent.

So far, the approach to building speech technology in these languages has been to collect data from speakers of unsupported languages and train models on that data. These conventional methods require substantial effort to collect human voice data at scale, with significant cost implications: even optimistic estimates put the cost at US$100-150 per hour of voice data collected. While collecting 5-10 hours of speech from a single speaker for text-to-speech (TTS) development may be within reach, the cost of collecting the hundreds of hours of diverse speech needed to train a quality ASR model, and of doing so for the hundreds of languages that remain unsupported, is prohibitive, even amid increasing investment in AI for development.

Speech technology holds great promise for providing a more inclusive digital experience, especially for vulnerable groups. The digital divide between globally dominant languages whose speakers benefit from speech technologies in everyday life and over 2,000 African languages currently unsupported by comparable tools is stark. This divide becomes even more pronounced in times of crisis. For example, a multilingual, voice-enabled chatbot providing information about how and where to access healthcare during a humanitarian emergency could be life-saving for community members with low literacy or disabilities, especially those who speak languages different from those used by service providers. In the absence of such tools, the most vulnerable members of society risk being excluded from access to vital information.

We advocate for increased investment in voice data collection to support African language technology. At the same time, we recognize that existing resources are insufficient to enable functional speech technology for most African languages, especially when considering the broader components of a complete language AI solution like machine translation or (large) language models. The current landscape calls for innovative solutions to accelerate progress in African language technology at scale.

Our approach

As part of a grant funded by the Gates Foundation, CLEAR Global and Dimagi partnered to test an experimental approach to creating and improving ASR for African languages at a fraction of the cost typically required by resource-intensive voice data collection initiatives.

We began with the hypothesis that large language models (LLMs) such as GPT and Claude can be used to create sentences in many African languages, and asked: can these synthetic sentences be used to create synthetic voice data of sufficient quality to improve ASR models? What are the potential risks and rewards?

We approached this project with optimism, given promising research on using synthetic data to enhance speech technology in major languages. Results show that this approach can reduce word error rate (WER; roughly, the proportion of words transcribed incorrectly) by 9-15% in one English example, and by a similar margin (around 15%) in a Turkish example. However, much of this earlier research is motivated by concern that the readily available human data, most of which is in majority languages, has already been used for AI development. Our motivation differs: we are addressing the scarcity of data for many African languages.
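For readers new to the metric: WER is conventionally computed as the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the model output, divided by the number of reference words, so it can exceed 100% when a model inserts many extra words. The Python sketch below illustrates that standard definition; it is not the evaluation code of the studies cited, and real evaluations usually normalize text first or rely on a tested library such as jiwer. Percentage reductions like those above are often relative, and `relative_reduction` shows how such a figure is computed.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference words processed so far
    # and the first j hypothesis words (standard dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: reference word missing from output
                curr[j - 1] + 1,         # insertion: extra word in output
                prev[j - 1] + (r != h),  # substitution, or a free match if equal
            )
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Relative WER reduction: a drop from 0.40 to 0.34 is a 15% relative reduction."""
    return (baseline_wer - new_wer) / baseline_wer
```

For example, transcribing "the cat sat on the mat" as "the cat sit on mat" contains one substitution and one deletion, giving a WER of 2/6.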

We outline the process, results, and implications in the sections that follow. For more detail and to reproduce these results, see the research paper, models, and code.

The process

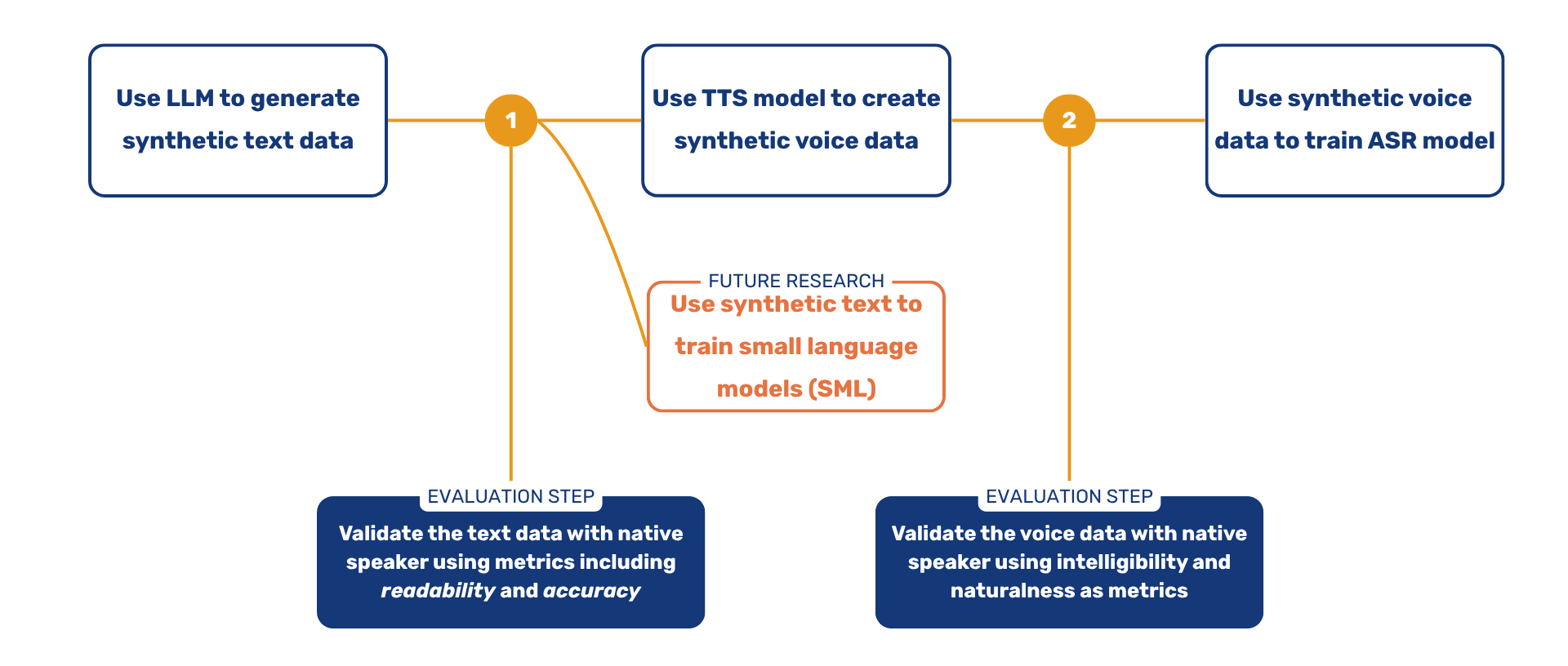

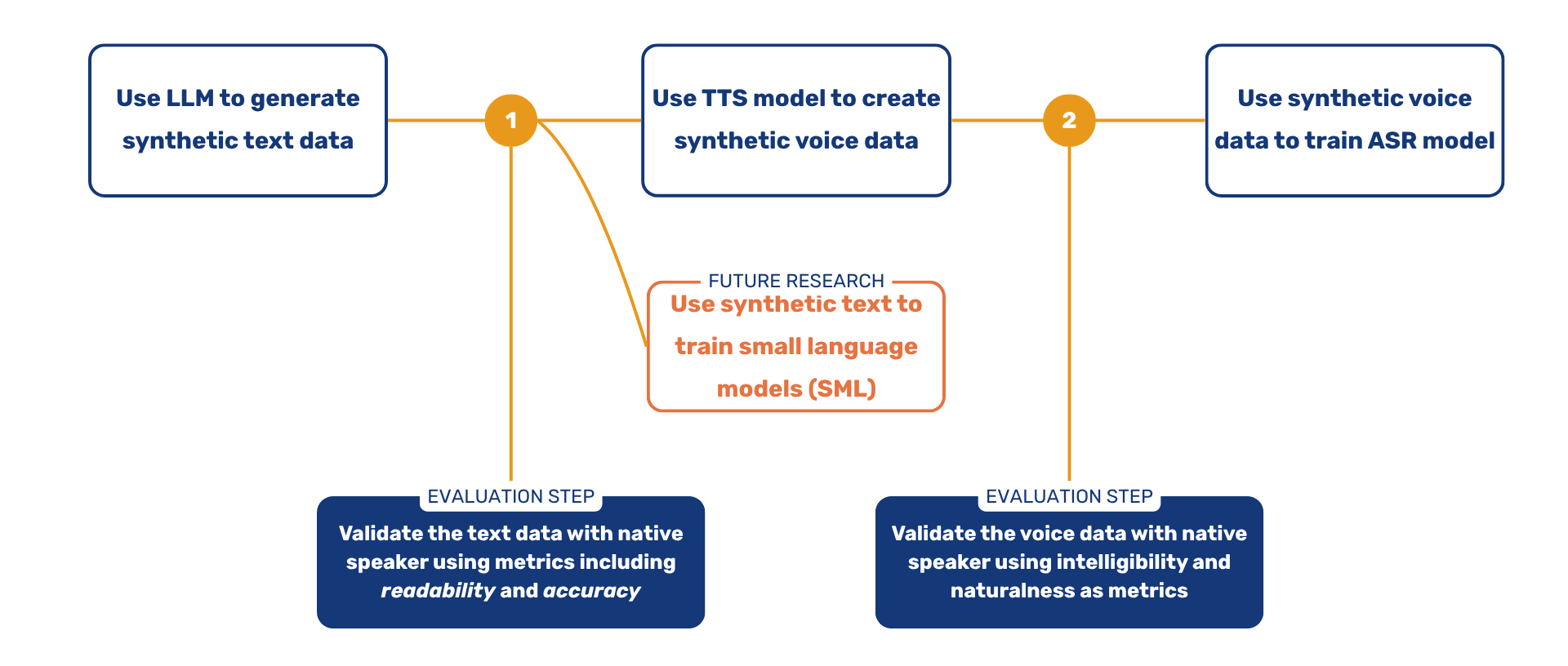

Our process has three key steps:

- Generate and evaluate synthetic text using an LLM

- Generate and evaluate synthetic voice data with a Text-to-Speech (TTS) model based on that text

- Fine-tune an Automatic Speech Recognition (ASR) model with different ratios of human and synthetic voice data, and evaluate performance

Overview of the process to create synthetic voice data

We describe the process step by step in the following sections. If you are mainly interested in the outcomes, skip ahead to the results section.

Step 1: Creating synthetic text in 10 African languages

Using LLMs, we generated and evaluated synthetic text for 10 African languages. Language selection was based on regional and linguistic diversity, speaker population, and practical factors like access to human evaluators and availability of TTS models and speech datasets needed for later steps.

| Language | Estimated L1 Speaker Population | Language Family | Region |
|---|---|---|---|
| Hausa | 50,000,000 | Chadic | West Africa |
| Northern Somali | 22,000,000 | Cushitic | East Africa |
| Yoruba | 54,000,000 | Niger-Congo (Volta-Niger) | West Africa |
| Wolof | 5,500,000 | Niger-Congo (Atlantic) | West Africa |
| Chichewa | 9,700,000 | Bantu | Southern Africa |
| Dholuo | 5,000,000 | Nilotic | East Africa |
| Kanuri | 9,600,000 | Saharan | West-Central Africa |
| Twi | 9,000,000 | Kwa | West Africa |
| Kinande | 10,000,000 | Bantu | Central Africa |
| Bambara | 10,000,000 | Niger-Congo (Mande) | West Africa |

Languages for which we created and evaluated synthetic text data

Here’s how we created the text datasets:

- We prompted LLMs to generate short, simple sentences and questions in the target language, along with English translations. Each prompt also specified a topic for the synthetic text, drawn from 34 themes: 17 aligned with the UN Sustainable Development Goals and 17 covering the most common topics in the FLORES/FLEURS evaluation datasets, which we used later.

- For each language, we generated 1,200 sentences, spread across several (usually three) LLMs.

- Human evaluators from the Translators without Borders (TWB) Community evaluated these sentences in two rounds against five key metrics (Readability and Naturalness, Grammatical Correctness, Real Words, Notable Error, and Adequacy and Accuracy), intended to capture both the quality and the comprehensibility of the sentences.

- We used the evaluation results to identify the best LLM configuration per language, prioritizing those with the highest average Readability and Naturalness scores.

- For the three languages selected for voice data generation and ASR fine-tuning, we then used the top-performing LLM to generate a large corpus.
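The generation and selection steps above can be sketched in Python as follows. This is a hypothetical illustration, not the project's code: the prompt wording in `build_prompt` is an assumption (the prompts actually used are not reproduced in this post), and the rating records are assumed to hold one evaluator's 1-7 Readability and Naturalness score per sentence.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Hypothetical record: (language, llm_config, score), where score is one
# evaluator's 1-7 Readability and Naturalness rating for one sentence.
Rating = Tuple[str, str, int]


def build_prompt(language: str, theme: str, n: int = 10) -> str:
    """Illustrative generation prompt; the wording here is an assumption."""
    return (
        f"Write {n} short, simple sentences and questions in {language} "
        f"about the topic: {theme}. After each one, give its English translation."
    )


def best_config_per_language(ratings: Iterable[Rating]) -> Dict[str, Tuple[str, float]]:
    """Pick, per language, the LLM configuration with the highest mean score."""
    by_config: Dict[Tuple[str, str], List[int]] = defaultdict(list)
    for language, config, score in ratings:
        by_config[(language, config)].append(score)

    best: Dict[str, Tuple[str, float]] = {}
    for (language, config), scores in by_config.items():
        avg = float(mean(scores))
        # Strict ">" keeps the first configuration seen when two are tied.
        if language not in best or avg > best[language][1]:
            best[language] = (config, avg)
    return best
```

Averaging over all ratings for a configuration pools both evaluation rounds and all evaluators; with few evaluators per language, it may be worth also checking how much they agree before trusting small differences between configurations.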

See here the synthetic text datasets.

Step 2: Creating synthetic voice data in 3 African languages

For step 2, we selected 3 of the 10 languages evaluated in step 1: Hausa, Dholuo, and Chichewa. Step 1 had produced text of sufficient quality in these languages, and voice data was available to fine-tune the TTS and ASR models in steps 2 and 3.

We created the voice datasets following this process:

- We fine-tuned various TTS models (including XTTS-v2, YourTTS, and a modified version of BibleTTS) on the open.bible corpus. We also used Meta’s MMS model and the original BibleTTS model.

- We evaluated 337 synthetic audio samples per model with native speakers from the TWB community on two metrics (Intelligibility and Naturalness).

- Based on the results of this evaluation, we created around 500 hours of synthetic voice data per language with our fine-tuned YourTTS model. We also created synthetic voice data for Hausa with the transformer-based XTTS model. The synthetic voice datasets can be accessed here: Hausa, Dholuo, Chichewa.
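The post does not describe how the roughly 500 hours per language were assembled. As one hypothetical way to track progress toward such a target, the sketch below sums the duration of generated clips with Python's standard `wave` module, assuming the TTS model writes uncompressed PCM WAV files; the function names are illustrative.

```python
import wave
from pathlib import Path
from typing import Iterable, List, Tuple


def wav_duration_seconds(path: Path) -> float:
    """Duration of a PCM WAV file, computed from its frame count and sample rate."""
    with wave.open(str(path), "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def select_until_target(paths: Iterable[Path], target_hours: float) -> Tuple[List[Path], float]:
    """Take clips in order until their total duration reaches target_hours.

    The last clip may overshoot the target. Returns the selected clips and
    their total duration in hours.
    """
    target_seconds = target_hours * 3600
    selected: List[Path] = []
    total_seconds = 0.0
    for path in paths:
        if total_seconds >= target_seconds:
            break
        selected.append(path)
        total_seconds += wav_duration_seconds(path)
    return selected, total_seconds / 3600
```

Reading durations from the files rather than estimating them from sentence counts matters because TTS output length varies with sentence length and speaking rate.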

The fine-tuned TTS models are available here: Hausa, Dholuo, Chichewa.

Step 3: Fine-tuning and evaluating automatic speech recognition models in 3 African languages

While over 500 hours of human voice data have been published in Hausa as part of the NaijaVoices project, there is substantially less voice data available in the public domain for Chichewa (34 hours) and Dholuo (19 hours). This presented the opportunity to define two scenarios for step 3: a medium-data scenario for Hausa, and a low-data scenario for Chichewa and Dholuo.

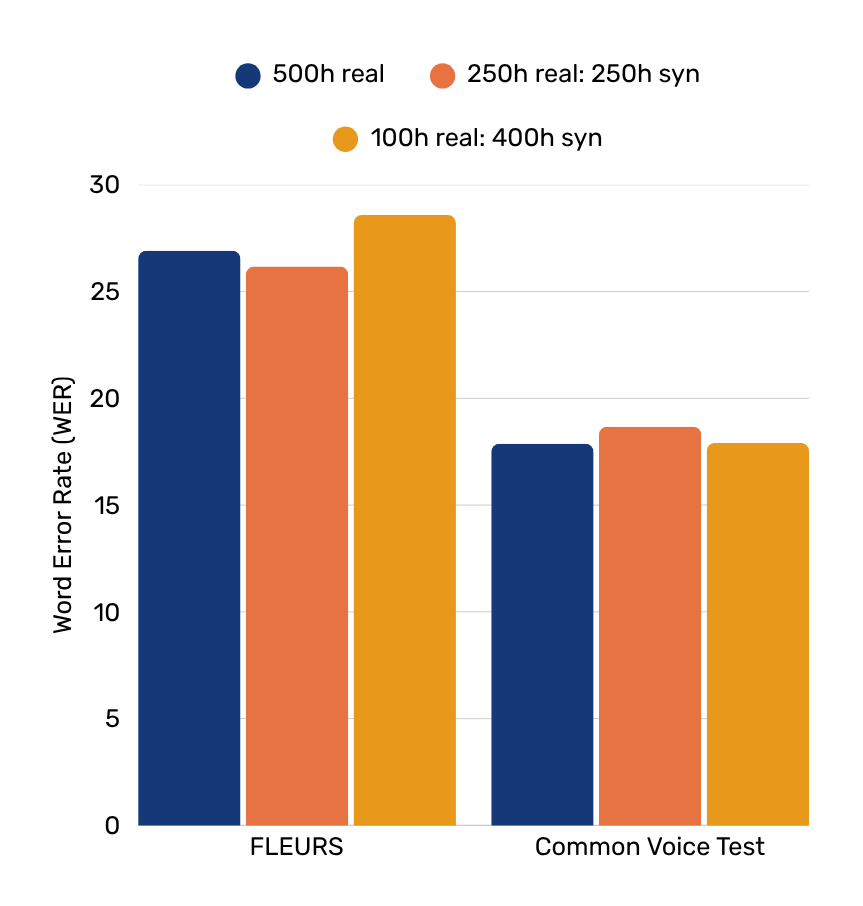

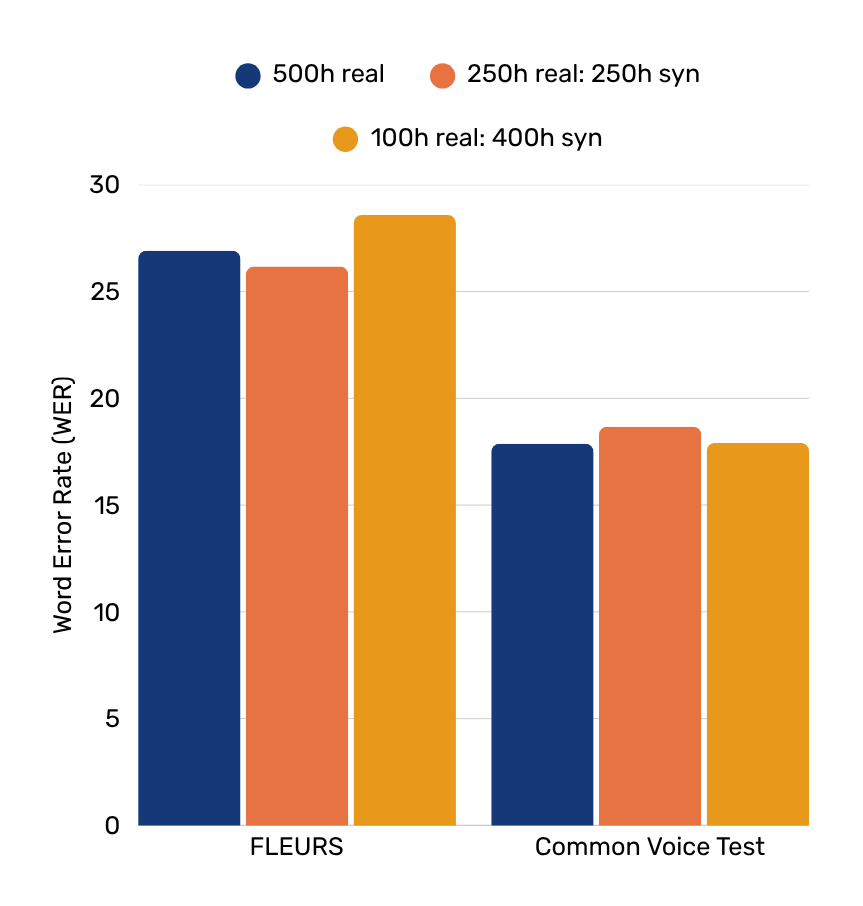

For Hausa, we wanted to investigate whether synthetic voice data can replace human data without compromising performance. To do this, we kept the total size of the training corpus constant at 500h but varied the ratio of real to synthetic data: 500h of real data only, 250h real with 250h synthetic, and 100h real with 400h synthetic. To rule out the possibility that the ASR models saturate at a given amount of human data, we also trained the same models on smaller amounts of human data alone (100h and 250h). We evaluated ASR performance on three test sets (NaijaVoices, Common Voice, FLEURS).
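The Hausa configurations above can be expressed as hour budgets. The sketch below is a hypothetical way to build such training lists, assuming each clip is known by an ID and a duration in seconds and that subsets are drawn at random without replacement; the post does not say how its subsets were chosen, and all names are illustrative.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Hypothetical clip record: (clip_id, duration_in_seconds).
Clip = Tuple[str, float]

# Hausa training mixes described in the text, as (human_hours, synthetic_hours).
HAUSA_MIXES: Dict[str, Tuple[float, float]] = {
    "human_500": (500, 0),
    "human_250_synthetic_250": (250, 250),
    "human_100_synthetic_400": (100, 400),
    "human_250": (250, 0),  # smaller human-only controls, used to check for saturation
    "human_100": (100, 0),
}


def sample_hours(clips: Sequence[Clip], hours: float, seed: int) -> List[Clip]:
    """Shuffle with a fixed seed, then take clips until `hours` of audio is reached."""
    pool = list(clips)
    random.Random(seed).shuffle(pool)
    chosen: List[Clip] = []
    total_seconds = 0.0
    for clip in pool:
        if total_seconds >= hours * 3600:
            break
        chosen.append(clip)
        total_seconds += clip[1]
    return chosen


def build_mix(human: Sequence[Clip], synthetic: Sequence[Clip],
              human_hours: float, synthetic_hours: float, seed: int = 0) -> List[Clip]:
    """Concatenate a human subset and a synthetic subset into one training list."""
    return (sample_hours(human, human_hours, seed)
            + sample_hours(synthetic, synthetic_hours, seed + 1))
```

Reusing the same seed makes each smaller human subset a prefix of the larger ones (for example, the 100h subset is contained in the 250h subset), so the mixes differ mainly in how much data they contain rather than in which recordings were drawn.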

The fine-tuned models for Hausa can be found here: Hausa w2v-BERT 2.0 models

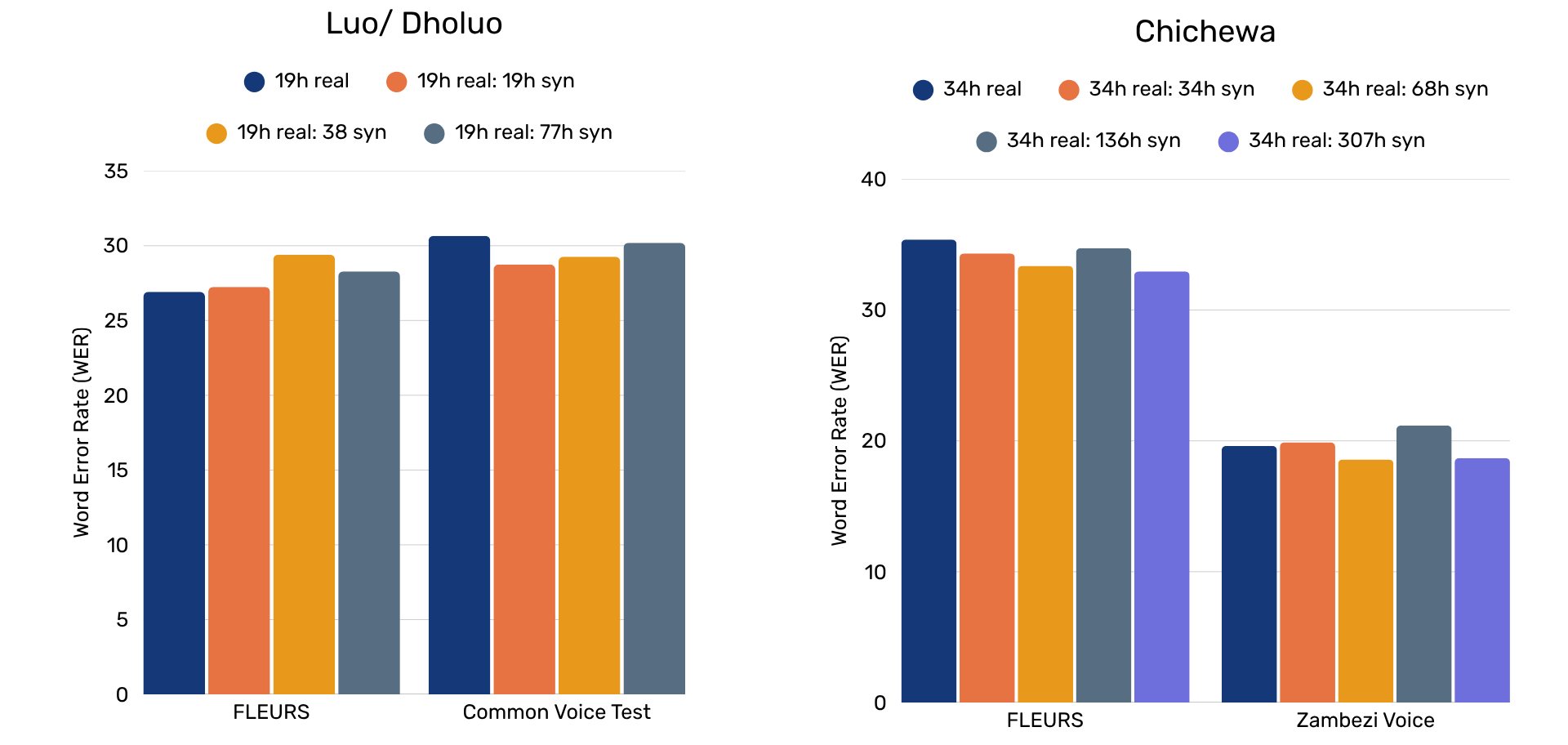

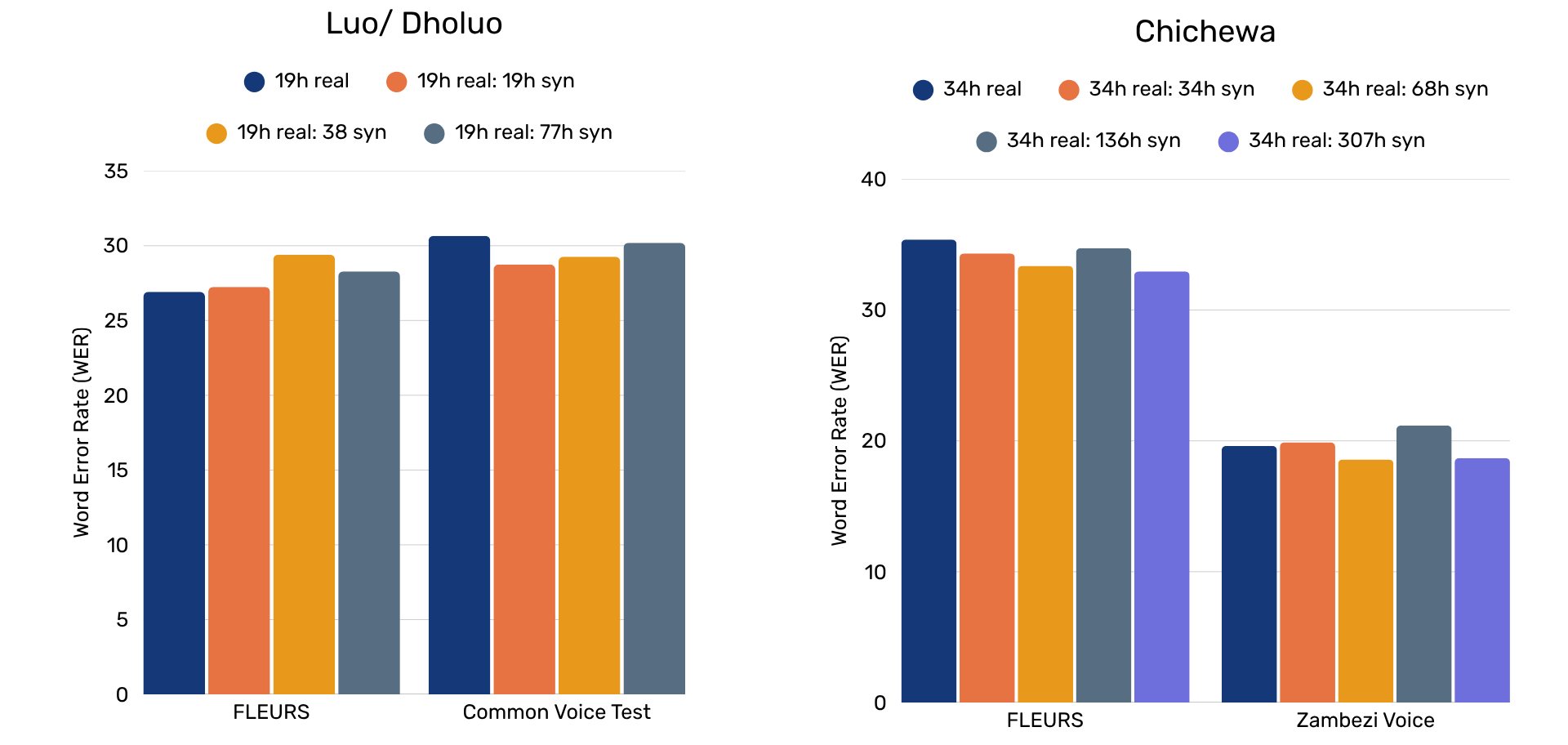

For Chichewa and Dholuo, much less data was available, a situation that is likely more representative of the majority of African languages. For these two languages, in contrast to Hausa, we wanted to see whether adding increasing amounts of synthetic data to the training corpus improves ASR performance. In this scenario, we trained ASR models on the available human data alone and on 1:1, 1:2, and 1:4 ratios of human to synthetic data, as well as a 1:9 ratio for Chichewa, given some indications of improvement there. We evaluated ASR performance on two test sets for each language (FLEURS and Zambezi Voice for Chichewa; FLEURS and Common Voice for Dholuo).
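Unlike the Hausa setup, these ratios add synthetic data on top of a fixed amount of human data, so the corpus grows with each ratio. A small Python helper makes the resulting hour budgets explicit, using the human hours stated earlier (34h for Chichewa, 19h for Dholuo); the function name is illustrative.

```python
from typing import Dict, Sequence, Tuple


def ratio_budgets(human_hours: float, ks: Sequence[int]) -> Dict[str, Tuple[float, float]]:
    """For each human:synthetic ratio 1:k, return (synthetic hours, total hours)."""
    return {f"1:{k}": (k * human_hours, (1 + k) * human_hours) for k in ks}


# Human hours and ratios as described in the text.
chichewa = ratio_budgets(34, [1, 2, 4, 9])
dholuo = ratio_budgets(19, [1, 2, 4])
```

For example, the 1:9 Chichewa setting adds 306h of synthetic speech to 34h of human speech, 340h in total, which fits within the roughly 500h of synthetic data generated per language.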

The fine-tuned models can be found here: Chichewa w2v-BERT 2.0 models, Dholuo w2v-BERT 2.0 models

Results

Synthetic text

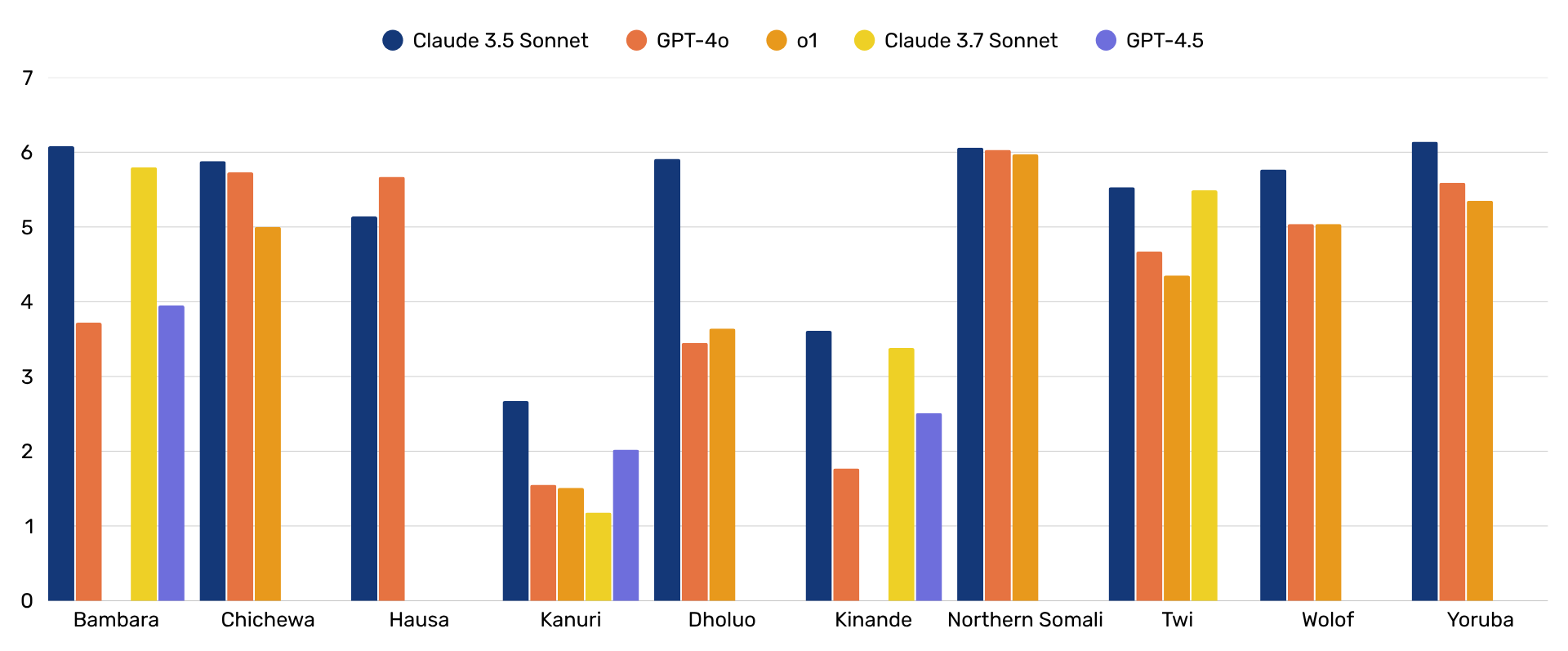

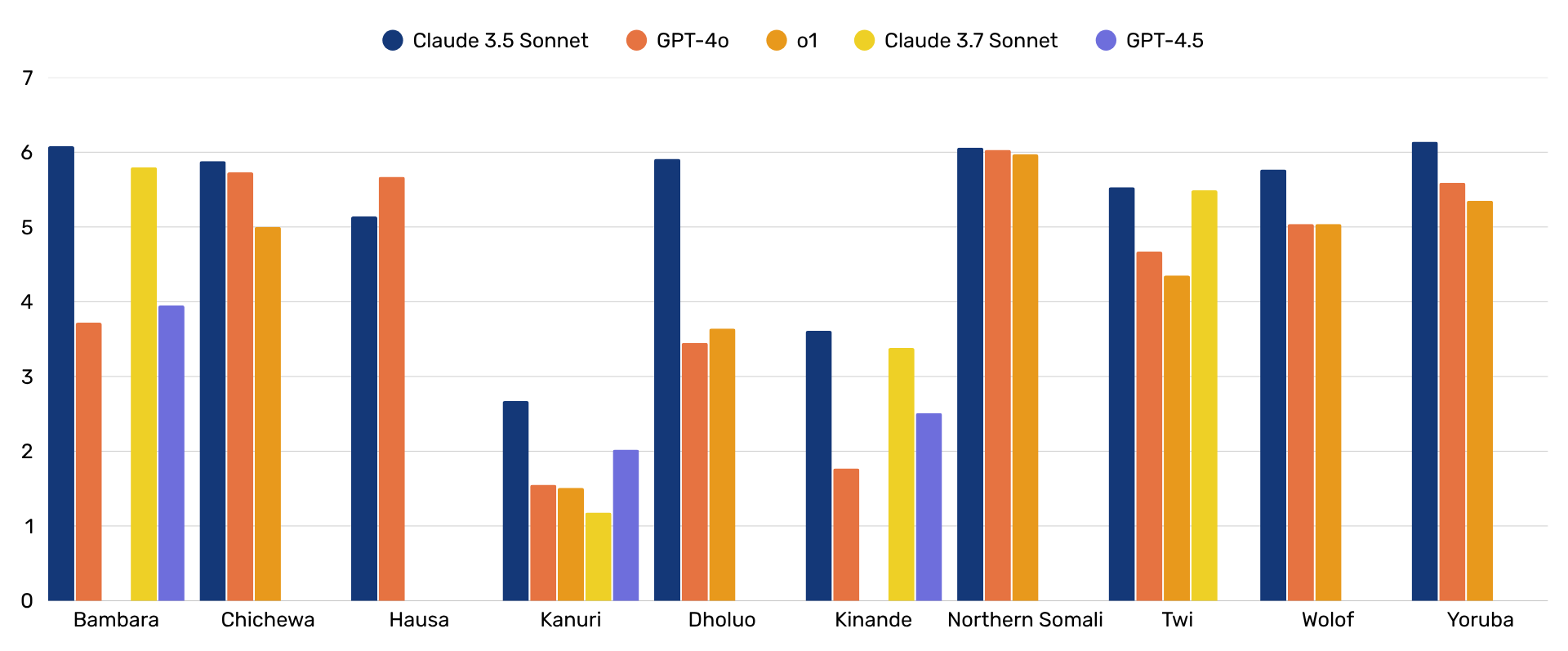

The first key result of this work is the evaluation of the quality of text generated by LLMs in step 1 of our process. The graph compares mean readability scores on a seven-point scale across languages, including the results of smaller-scale generation and evaluation for Yemba and Ewondo. Eight of the 12 languages achieved a mean rating above 5.0, which we took as the threshold for text of sufficient quality to generate synthetic voice data in subsequent steps. The gap between low-resource and very low-resource languages (here Kanuri, Kinande, Yemba, and Ewondo) is nonetheless stark. Evaluators for these languages reinforced this by reporting a range of issues, including sentences generated in an altogether different language: GPT-4o, for instance, when prompted to generate Kanuri sentences, largely produced sentences in Hausa.

Comparison of readability scores (1-7 scale) for synthetic text generated by various LLMs for 10 African languages

Synthetic voice data and improving ASR models

Hausa: Medium-data scenario

For Hausa, we kept the total amount of training data constant but varied the ratio between real and synthetic data. If synthetic data were an adequate substitute for human data, we would therefore expect similar performance across these configurations, the only difference being that some of the training data is synthetic.

Because the synthetic voice data we generated was exclusively male (the Bible corpus contains only male speakers), we were concerned that ASR models trained on it would perform worse for women. The Common Voice and NaijaVoices datasets allowed us to test this: contrary to our concern, the fine-tuned ASR models on average performed slightly worse for male than for female voices.
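A group-level comparison like this one can be sketched in Python as below. It assumes each test utterance carries a speaker-gender label in the dataset metadata and that per-utterance error counts (substitutions + deletions + insertions) have already been computed; the record layout is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical per-utterance record: (group_label, word_errors, reference_word_count).
Utterance = Tuple[str, int, int]


def wer_by_group(utterances: Iterable[Utterance]) -> Dict[str, float]:
    """Corpus-level WER per group: total errors divided by total reference words."""
    errors: Dict[str, int] = defaultdict(int)
    words: Dict[str, int] = defaultdict(int)
    for group, n_errors, n_words in utterances:
        errors[group] += n_errors
        words[group] += n_words
    return {g: errors[g] / words[g] for g in errors if words[g] > 0}
```

Pooling errors and reference words within each group, rather than averaging per-utterance WERs, keeps a few short utterances with high error rates from dominating the comparison.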

Models usually improve when trained on more data, and we found the same: our best models were those trained on all the data we had available, i.e. all human data plus all the synthetic data we created, although the improvements were minor.

Dholuo and Chichewa: Low-data scenario

In the low-data scenario with Chichewa and Dholuo, we added increasing amounts of synthetic data to the training corpus and therefore expected performance to improve as the corpus grew. Unfortunately, this is not what we found. There are minor improvements when adding as much synthetic data as there was real data (i.e. 19 hours real : 19 hours synthetic for Dholuo and 34 hours real : 34 hours synthetic for Chichewa), but no further improvements beyond this.

For Dholuo, the improvements also depend on the evaluation data. When we evaluated on FLEURS, no amount of synthetic data yielded any improvement. When we evaluated on the Common Voice evaluation data, the 1:1 ratio was around 6.5% better.

Chichewa showed somewhat more promising results: both the 1:1 and the 1:9 ratios yielded improvements of around 6.5%.

Challenges in evaluation

We and the human evaluators also encountered various challenges when evaluating the data and models. We discuss them in more detail in our research paper, but they are important to keep in mind for anyone planning similar projects.

For example, we found that human evaluators often disagree, so multiple evaluators are needed. Our investigations indicate that this may stem from the fact that LLMs, when prompted to generate text in very low-resource languages, often generate text in a larger regional language instead (e.g. Hausa when prompted for Kanuri). Without good measures to monitor this and close collaboration with the human evaluators, evaluators may end up rating the model's better output in that larger regional language instead of flagging its inability to generate text in the very low-resource language.
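The paper's agreement analysis is not reproduced here, and this post does not say which statistic it uses. As an illustration, one common choice for ordinal 1-7 ratings like Readability and Naturalness is Cohen's kappa with quadratic weights, which corrects for chance agreement and penalizes large disagreements more than small ones. A minimal Python sketch for two evaluators rating the same sentences:

```python
from collections import Counter
from typing import Sequence


def quadratic_weighted_kappa(a: Sequence[int], b: Sequence[int],
                             lo: int = 1, hi: int = 7) -> float:
    """Cohen's kappa with quadratic weights for two raters scoring the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty rating lists of equal length")
    if hi <= lo:
        raise ValueError("the rating scale needs at least two points")
    if any(not lo <= r <= hi for r in (*a, *b)):
        raise ValueError("rating outside the scale")
    n = len(a)
    joint = Counter(zip(a, b))  # observed (rater_a, rater_b) pairs
    marg_a, marg_b = Counter(a), Counter(b)
    observed = expected = 0.0
    for i in range(lo, hi + 1):
        for j in range(lo, hi + 1):
            # Disagreement weight grows with the squared distance between ratings.
            w = (i - j) ** 2 / (hi - lo) ** 2
            observed += w * joint[(i, j)] / n
            expected += w * (marg_a[i] / n) * (marg_b[j] / n)
    if expected == 0:
        raise ValueError("kappa is undefined when both raters use one identical rating")
    return 1.0 - observed / expected
```

A value of 1 means perfect agreement and 0 means agreement no better than chance. For more than two evaluators, pairwise kappas can be averaged, or a multi-rater statistic such as Krippendorff's alpha used instead.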

We also looked more closely at the ASR evaluation datasets. A full investigation was beyond the scope of our project, but we asked human evaluators to check the most common errors made by the ASR models. Many of these were not errors at all, but different and equally legitimate ways of writing the same word: many of the languages we worked with do not have standardized scripts, so there is no single correct way of writing many words. Our human evaluators also flagged errors in the evaluation data itself. We will need better evaluation data for African languages if we want to properly understand how well our language AI performs.
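One way to keep legitimate spelling variants from counting as errors is to normalize both the reference and the model output before scoring. The Python sketch below lowercases, applies Unicode NFC (so precomposed and combining-diacritic spellings compare equal), drops punctuation, and maps known variants to one canonical form. The variant table is hypothetical and would need to be built with native speakers; the English words in the usage example are stand-ins only.

```python
import re
import unicodedata
from typing import Dict, List


def normalize(text: str, variants: Dict[str, str]) -> List[str]:
    """Lowercase, apply NFC, drop punctuation, and map spelling variants to one form.

    Keys in `variants` should themselves be lowercase and NFC-normalized.
    """
    text = unicodedata.normalize("NFC", text.lower())
    # Keep word characters and apostrophes (some orthographies use apostrophes inside words).
    tokens = re.findall(r"[\w'’]+", text)
    return [variants.get(token, token) for token in tokens]


def edit_distance(ref: List[str], hyp: List[str]) -> int:
    """Word-level Levenshtein distance (substitutions, deletions, insertions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (r != h)))
        prev = curr
    return prev[-1]


def normalized_wer(reference: str, hypothesis: str, variants: Dict[str, str]) -> float:
    ref = normalize(reference, variants)
    hyp = normalize(hypothesis, variants)
    return edit_distance(ref, hyp) / max(len(ref), 1)
```

With `variants = {"colour": "color", "grey": "gray"}`, scoring "the color is gray" against the reference "The colour is grey." gives a WER of 0; without the table, the two spelling variants count as two substitutions.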

Beyond this, we provide much more detail behind these results in the research paper, including supplementary analyses of agreement between human evaluators, approaches to fine-tuning LLMs for text generation, duplication challenges in large-scale synthetic text generation, and examples of ASR evaluation challenges caused by non-standardized scripts and errors in the evaluation data.

Summary

Our research provides several promising insights and recommendations for others looking to further develop or replicate this work in other contexts:

Synthetic text generation by LLMs following our process yields promising results in medium- and low-resource languages: in the majority of languages investigated, evaluators rated the readability of the generated sentences above the minimum quality threshold we set for use in synthetic voice data generation. While Claude 3.5 outperformed OpenAI’s GPT-4o in 8 out of 10 languages, the best-performing LLM for a given target language is hard to determine in advance, so human evaluation across models is a key step in this process.

Synthetic voice data can be generated at a fraction of the cost of human voice data collection: we estimate that we created high-quality synthetic voice data for Hausa, Dholuo, and Chichewa at less than 1% of the cost of human data.
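As a back-of-the-envelope illustration, not the project's actual accounting, combining the US$100-150 per hour estimate quoted earlier with the roughly 500 hours of synthetic data generated per language shows what "less than 1%" implies. The assumption that the comparison is against 500 hours of equivalent human data is ours, and the function name is illustrative.

```python
from typing import Tuple


def cost_comparison(hours: float,
                    usd_per_hour: Tuple[float, float] = (100.0, 150.0),
                    synthetic_share: float = 0.01) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """Return (human collection cost range, ceiling implied by the synthetic share), in USD."""
    low, high = usd_per_hour
    human = (hours * low, hours * high)
    synthetic_ceiling = (human[0] * synthetic_share, human[1] * synthetic_share)
    return human, synthetic_ceiling
```

For 500 hours, human collection would cost roughly US$50,000-75,000, so less than 1% corresponds to under about US$500-750 per language.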

Synthetic voice data has the potential to complement human data in improving ASR performance and to improve cost efficiency by reducing the amount of human data required, but we only see evidence of this when a minimum amount of human data is available.

At the same time, we discovered several key limitations:

Synthetic text generation by LLMs is poor for the lowest-resource languages: while we were able to create a synthetic text corpus in Hausa that yielded synthetic voice data which evaluators anecdotally reported could be mistaken for a real speaker, for languages like Kanuri and Kinande, text outputs were highly flawed and unusable in later stages of the pipeline. To remedy this, there is likely no way around digitizing at least a minimum amount of language data to support these languages and their communities of speakers.

The word error rates achieved are too high for most practical applications: a word error rate (WER) above 20%, as seen across the languages here, is unlikely to be immediately useful. While our work highlighted potential shortcomings of standard automatic metrics like Word Error Rate and Character Error Rate for low-resource languages with non-standardized scripts, even our best-performing ASR models did not come close to the WER of 10% commonly seen as the threshold for real-world usability.

What this means and looking forward

We think these results are a first step, and a promising one. Someone building an ASR model with only 250h of voice data available could create synthetic data and improve their ASR model to a level otherwise only achievable with twice as much data. However, such a model may still be insufficient for use in applications and for creating the more inclusive digital services we are aiming for.

To achieve this, more experimentation and collaboration will be needed. An improved version of our approach, perhaps combined with a better ASR model architecture and high-quality data, might yield technology good enough for those services. We think our results indicate that synthetic data can play a role in creating language AI for all African languages, and that we should continue to investigate alternative and complementary ideas to achieve this goal. Synthetic data, however, won’t entirely replace the need for further efforts to collect data and improve models for African languages.

This project is part of a growing effort among researchers, practitioners, and communities working toward a future where language technologies are truly inclusive and empower people to get information and be heard, whatever language they speak. If you have thoughts, we welcome your feedback, ideas, and collaboration as we collectively work toward more equitable language technologies.

Interested to hear more about this work? Contact us.

This blog is based on research funded by the Gates Foundation. The findings and conclusions contained within are those of the authors and do not necessarily reflect positions or policies of the Gates Foundation.

With thanks to our partner Dimagi.

Dimagi is a global social enterprise that powers impactful frontline work through digital solutions and services. Since 2002, Dimagi has worked to create a world where everyone has access to the services they need to thrive. CommCare, Dimagi’s flagship offering, is the most widely deployed digital platform for frontline workers.